Understanding WebGPU

WebGPU is an API for interacting with the GPU from the web browser. WebGPU is not a program in itself; it is a standardized interface between the web browser and the GPU, and each browser has to implement it. In fact, web browsers can largely be thought of as bundles of implementations of web standards, such as HTML.
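Because each browser has to implement WebGPU itself, a page cannot assume the API is there. Here is a minimal sketch of how a page might check for it and ask for a GPU device. `requestAdapter()` and `requestDevice()` are the standard WebGPU entry points; the helper name `initWebGPU` and the small `GpuLike` / `AdapterLike` interfaces are my own stand-ins, there only so the snippet compiles without the full WebGPU typings.

```typescript
// Minimal structural types so this sketch compiles without the
// @webgpu/types package. Real code would use the full WebGPU typings.
interface AdapterLike {
  requestDevice(): Promise<object>;
}
interface GpuLike {
  requestAdapter(): Promise<AdapterLike | null>;
}

// Hypothetical helper: resolves to a device, or to null when WebGPU
// cannot be used in this environment.
async function initWebGPU(gpu: GpuLike | undefined): Promise<object | null> {
  // `navigator.gpu` is undefined in browsers that do not implement WebGPU.
  if (gpu === undefined) return null;

  // An adapter represents a GPU the browser is willing to expose.
  // requestAdapter() resolves to null when no suitable adapter exists.
  const adapter = await gpu.requestAdapter();
  if (adapter === null) return null;

  // A device is the logical connection used to create resources
  // and submit work to the GPU.
  return adapter.requestDevice();
}
```

In a page you would pass in `navigator.gpu` and treat a `null` result as "fall back to WebGL or show a message."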

As software developers, we have a lot of ways to talk to the GPU. Most of the time, however, the choice is strictly dependent on which platform we are writing code for. Here is a simplified flowchart:

Let's imagine for a second that you are a browser vendor. You want to target all of these platforms, so you have to make and implement an API that turns the browser's GPU commands into the OS's GPU commands. You may notice that all of them (mostly) have OpenGL support. So you make WebGL as a wrapper over OpenGL.

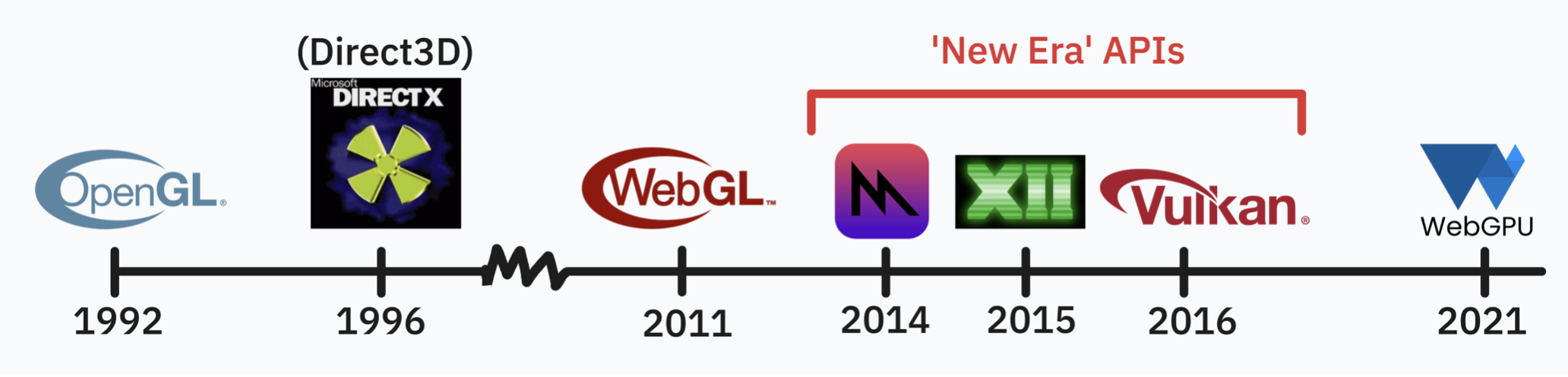

A Short History Lesson

WebGL is a simple idea: take OpenGL ES, a subset of OpenGL, and expose the API in the browser. When a user makes a call, just use the OS's OpenGL. This is what the four big browsers (Opera, Chrome, Firefox, Safari) did in 2011.

The big conflict here is that just a few years later, OpenGL was set to be replaced by a new generation of graphics APIs: Vulkan, Metal, and Direct3D 12. The key difference between these new APIs and OpenGL is the idea of "low driver overhead": by conforming more closely to how the GPU is actually structured, you can talk to the GPU with less work on the CPU. In practice, this means you have to set up a lot of things before you can start GPU work.

As part of this replacement, Apple deprecated OpenGL in 2018. This raises some questions about how WebGL works, right? The entire point of it was to be a wrapper over a now-deprecated API. Thankfully, the Chrome team had an answer ready all the way back in 2010! They created the ANGLE project to translate OpenGL ES to other graphics APIs. Nowadays, WebGL support looks like this:

A Better Way

Opening up these new APIs to the browser directly was the obvious next step. However, we need to solve the mismatch between what the browser wants and what the operating system wants. Let's say, for example, we pick Vulkan as the graphics API for the browser. Great! But now we have to work out how to run Vulkan on macOS. Because the people who make the browsers (Google, Apple, Mozilla) are mostly the same people who make the operating systems (Google, Apple, Microsoft), they would have a hard time picking a favorite API out of the currently available ones. To solve this, a new API had to be made. And that's what WebGPU is.

The sad part about this is that we basically need another ANGLE to turn WebGPU into native OS APIs. But because WebGPU was designed for this, it maps much more closely to current APIs than WebGL does. That makes developing a new ANGLE easier, and the closer the "mapping" is to the native API, the less performance is lost. Here's what those mappings look like:
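As a rough illustration of that mapping, here is a small sketch of how a WebGPU implementation might choose a native backend per platform. The platform-to-API pairings reflect the usual native choices (Direct3D 12 on Windows, Metal on Apple platforms, Vulkan on Linux and Android), but the preference order, the `BACKENDS` table, and the `pickBackend` helper are assumptions of mine, not how any particular browser does it.

```typescript
// Native graphics APIs a WebGPU implementation might translate to.
type NativeBackend = "Direct3D 12" | "Metal" | "Vulkan";
type Platform = "windows" | "macos" | "ios" | "linux" | "android";

// Assumed preference order per platform. Real implementations decide this
// themselves and may support additional backends.
const BACKENDS: Record<Platform, NativeBackend[]> = {
  windows: ["Direct3D 12", "Vulkan"],
  macos: ["Metal"],
  ios: ["Metal"],
  linux: ["Vulkan"],
  android: ["Vulkan"],
};

// Hypothetical helper: pick the first preferred backend that the
// machine actually supports, or null if none of them is available.
function pickBackend(
  platform: Platform,
  available: NativeBackend[]
): NativeBackend | null {
  for (const backend of BACKENDS[platform]) {
    if (available.indexOf(backend) !== -1) return backend;
  }
  return null;
}
```

The point is that the same WebGPU calls sit on top of whichever entry the table resolves to, so the application never has to know which native API is underneath.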

What This Means For GPU Programming

Essentially, WebGPU is not bound to the web browser. It is the new "universal standard" for talking to the GPU. This has many possible applications. Let's say you're making a game engine, for example. You want to target every system possible, because you don't want to lock game developers into, say, desktop Linux only. If you were to use WebGPU instead of another graphics API, you would only have to write your rendering code once!

This isn't really a hypothetical. Today, you can use wgpu, a Rust implementation of WebGPU, to talk to the GPU natively on every major platform. That's why I'm so excited about WebGPU.